Understanding Production: What can you measure?

Production is everything. If your software doesn’t perform in production, it doesn’t perform. Thankfully there’s a range of information that you can measure and monitor that helps you understand your production system and solve any issues that may arise. But where do you start and what should you monitor and measure? What are the tradeoffs between different approaches?

Plenty of blog posts out there simply tell you about one approach and how it works. This post takes a different angle: it summarises the different types of performance related information that you can gather from a production environment, looks at how each can help you, and sets out the downsides and limitations of each category.

Production monitoring differs a lot from other developer tools: if monitoring tools add overhead to your system and slow it down, they can become the source of performance problems themselves. For example, you can’t just use the regular debugger from your IDE on a production system without slowing it down. At Opsian we’ve profiled a lot of production systems and often see situations where monitoring tools are the biggest overhead.

Metrics / Telemetry

A metric is just a number that tells you something about the system. For example - what percentage of your CPU time was in use rather than idle over the last second? Normally metrics are recorded at regular intervals, then tracked and stored so that you can make historical comparisons. For example - if users report the system being slow, how does your CPU usage during that period differ from before?

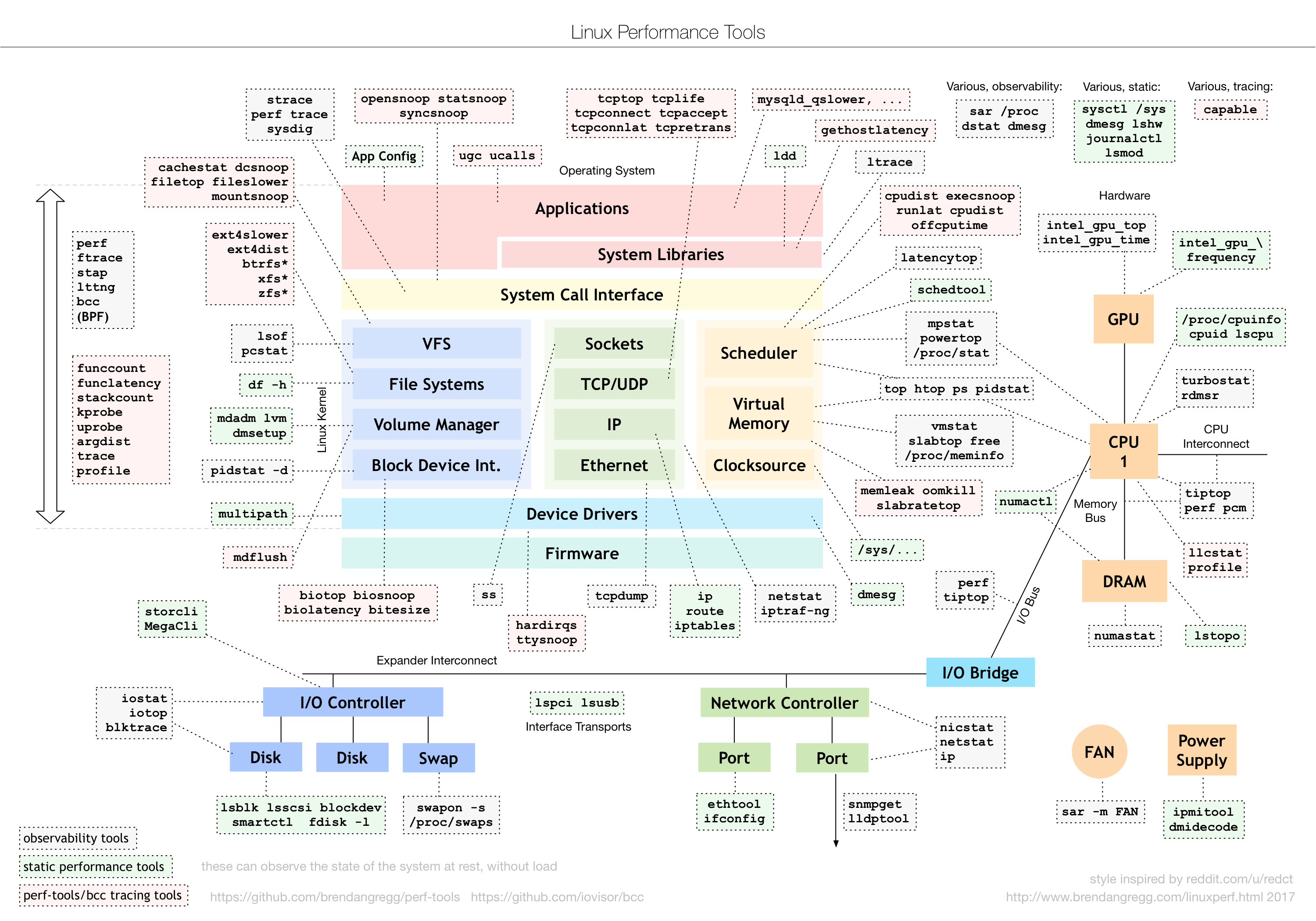
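To make the idea of recording at regular intervals and comparing against history concrete, here is a minimal sketch in Java. The `MetricSeries` class and its method names are hypothetical, made up for this illustration; the values fed in would come from whatever collector you already use.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical helper: keeps the most recent samples of one metric. */
final class MetricSeries {
    private final int capacity;
    private final Deque<Double> samples = new ArrayDeque<>();

    MetricSeries(int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        this.capacity = capacity;
    }

    /** Records one sample, dropping the oldest once the window is full. */
    void record(double value) {
        if (samples.size() == capacity) {
            samples.removeFirst();
        }
        samples.addLast(value);
    }

    /** Mean of the retained samples, or NaN if nothing has been recorded yet. */
    double mean() {
        return samples.stream().mapToDouble(Double::doubleValue).average().orElse(Double.NaN);
    }

    /** How far a new reading sits above (positive) or below (negative) the historical mean. */
    double deltaFromMean(double current) {
        return current - mean();
    }

    public static void main(String[] args) {
        MetricSeries cpuPercent = new MetricSeries(3);
        cpuPercent.record(20.0);
        cpuPercent.record(30.0);
        cpuPercent.record(40.0);
        System.out.println("baseline mean = " + cpuPercent.mean());
        System.out.println("delta for a 90% reading = " + cpuPercent.deltaFromMean(90.0));
    }
}
```

A real metrics system stores far more history and many series at once, but the comparison it enables is the same: a new reading against what was normal before.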

There is an almost limitless number of metrics that can be gathered from a production system, and each one can tell us a little bit about a different part of the puzzle. Brendan Gregg’s website even has a great picture showing which Linux tools can be used to inspect different components.

Hardware Metrics

These can often help you identify and understand whether you’re currently bottlenecked on CPU or on an IO operation. It’s no use trying to optimise your CPU usage if the real problem is that your network connection is saturated. Brendan Gregg’s USE method is often helpful in making practical sense of these hardware metrics.

Software System Metrics

Software metrics can also be helpful for understanding performance problems, for example measuring how much time your application spends in stop-the-world GC pauses.
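As a rough illustration of a software metric, the JVM exposes accumulated garbage collection time through the standard `java.lang.management` API. The sketch below sums it across collectors; the `GcTimeMetric` class name is made up for this example. Note that `getCollectionTime()` reports approximate accumulated collection time, which for concurrent collectors is not the same thing as stop-the-world pause time, so treat the number as an indicator rather than an exact pause measurement.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Hypothetical helper that reads GC time from the JVM's management beans. */
final class GcTimeMetric {

    /** Sums the approximate accumulated collection time (ms) across all collectors. */
    static long totalGcTimeMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long millis = gc.getCollectionTime(); // -1 means the collector does not report it
            if (millis > 0) {
                total += millis;
            }
        }
        return total;
    }

    /** GC time as a fraction of JVM uptime so far. */
    static double gcTimeFraction() {
        long uptimeMillis = ManagementFactory.getRuntimeMXBean().getUptime();
        return uptimeMillis <= 0 ? 0.0 : (double) totalGcTimeMillis() / uptimeMillis;
    }

    public static void main(String[] args) {
        System.out.println("GC time so far (ms): " + totalGcTimeMillis());
        System.out.println("fraction of uptime: " + gcTimeFraction());
    }
}
```

Sampling this value at regular intervals and storing the difference between samples turns it into a time series like any other metric.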

Business Metrics

These are also of key importance to understanding whether you have a performance problem or not. Keeping track of a latency histogram of user requests gives you an insight into how your customers experience your website. Tracking operational latency can be even more valuable in certain problem domains such as High Frequency Trading systems or online Advertising auctions.
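A latency histogram can be as simple as a set of counters, one per latency bucket. The sketch below is a minimal, single-threaded version; the `LatencyHistogram` class and the bucket boundaries are assumptions chosen for illustration, and a production system would more likely lean on its metrics library's histogram type.

```java
import java.util.Arrays;

/** Hypothetical fixed-bucket latency histogram. Not thread-safe. */
final class LatencyHistogram {
    private final long[] upperBoundsMillis; // inclusive upper bounds, sorted ascending
    private final long[] counts;            // one extra slot at the end for overflow

    LatencyHistogram(long... upperBoundsMillis) {
        this.upperBoundsMillis = upperBoundsMillis.clone();
        Arrays.sort(this.upperBoundsMillis);
        this.counts = new long[upperBoundsMillis.length + 1];
    }

    /** Counts the request in the first bucket whose upper bound it does not exceed. */
    void record(long latencyMillis) {
        int i = 0;
        while (i < upperBoundsMillis.length && latencyMillis > upperBoundsMillis[i]) {
            i++;
        }
        counts[i]++;
    }

    long count(int bucket) {
        return counts[bucket];
    }

    /**
     * Upper bound (ms) of the bucket that contains the given percentile, where
     * 0 < percentile <= 100. Returns Long.MAX_VALUE for the overflow bucket.
     */
    long percentileUpperBound(double percentile) {
        long total = Arrays.stream(counts).sum();
        if (total == 0) {
            throw new IllegalStateException("no samples recorded");
        }
        long rank = (long) Math.ceil(percentile / 100.0 * total);
        long seen = 0;
        for (int i = 0; i < counts.length; i++) {
            seen += counts[i];
            if (seen >= rank) {
                return i < upperBoundsMillis.length ? upperBoundsMillis[i] : Long.MAX_VALUE;
            }
        }
        return Long.MAX_VALUE;
    }

    public static void main(String[] args) {
        LatencyHistogram histogram = new LatencyHistogram(10, 100, 1000);
        for (long latency : new long[] {5, 7, 50, 2000}) {
            histogram.record(latency);
        }
        System.out.println("p50 is within " + histogram.percentileUpperBound(50) + " ms");
    }
}
```

Keeping buckets rather than an average matters because a handful of very slow requests can hide behind a healthy-looking mean.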

Metrics provide practical insight into production systems because they are computationally cheap to collect. They don’t have much overhead and most don’t slow the production system down.

There’s plenty of open source tooling that enables the capture, storage, query and display of metrics. Prometheus specialises in having a powerful query language that operates on time series data and integrates with its Alertmanager system in a very sophisticated manner. Grafana is a dashboarding tool for visualising what is happening with time series metrics.

Of course, just because you can measure something doesn’t mean that you need to, or should! Measuring every possible metric under the sun without having a way of understanding how they fit together, or what methodology you should apply when diagnosing things, can lead to murder mystery style debugging sessions where you point to each metric in turn, blaming it for the problem until you find something. It’s not as fun as it sounds.

But metrics aren’t the solution to all of life’s problems. Principally, they aren’t directly actionable. For example they may tell you that you’re CPU bottlenecked, but not where in your application code that bottleneck lies. They may tell you that you’re sitting around idle and not doing much, but not that it’s because your process is sitting around waiting on an external service.

Logs

A log is just a series of events that have been recorded - often in text but sometimes in a more sophisticated binary format. In this section we’ll look at both Garbage Collection logs and also custom logs.

The Oracle JDK and OpenJDK can both produce logs of garbage collection events (GC Logs) that provide insight into the GC subsystem. You need to use one of the following command-line options in order to enable GC logging:

-Xlog:gc*:file=<gc-log-file-path> # Java 9 or later

-XX:+PrintGCDetails -Xloggc:gclog.log # Java 8 or earlier

GC Logs are a mine of information about what your GC is doing. They can tell you when the system is paused, the allocation rates, how long each individual GC took, and the sizes and usage of the different heap regions within your system. Switching GC Logging on in production is also very low overhead. Of course - the big limitation of GC Logs is that they are only really useful in understanding GC problems.

Another common method for exposing performance information about operations from production is to log performance related events. For example the time that each request took, or specific operations in an event loop that took longer than a given time budget. These events could be stored using an existing simple logging system like log4j/slf4j/logback or could be exposed with a smarter structured logging system.
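Logging operations that blow their time budget might look something like the sketch below. It uses `java.util.logging` purely so that the example has no dependencies; the same shape works with log4j, slf4j or logback. The `SlowOperationLogger` class, the `perf` logger name and the budget values are all hypothetical.

```java
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;
import java.util.logging.Level;
import java.util.logging.Logger;

/** Hypothetical helper that logs any operation exceeding its time budget. */
final class SlowOperationLogger {
    private static final Logger LOG = Logger.getLogger("perf"); // assumed logger name

    /** Runs the body and logs a warning if it took longer than budgetMillis. */
    static <T> T timed(String operation, long budgetMillis, Supplier<T> body) {
        long start = System.nanoTime();
        try {
            return body.get();
        } finally {
            long elapsedMillis = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
            if (elapsedMillis > budgetMillis) {
                LOG.log(Level.WARNING, "{0} took {1} ms (budget {2} ms)",
                        new Object[] {operation, elapsedMillis, budgetMillis});
            }
        }
    }

    public static void main(String[] args) {
        String result = timed("render-home-page", 10, () -> {
            try {
                Thread.sleep(50); // stand-in for slow work
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return "done";
        });
        System.out.println(result);
    }
}
```

Only logging when the budget is exceeded keeps the log volume down, which matters once the system scales up.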

Event logs have the advantage that they can be totally customised and contain lots of contextual information. For example they could store which user was logged in or information relevant to your problem domain. Since many systems have production logging not just for performance but also debugging, logging performance related information can be an easy addition.

There are two big problems with logging of production events. Firstly you need to add this logging code yourself which takes developer team time in order to write and maintain. Secondly as you scale your system up the event logging system can produce a vast quantity of data which can become a bottleneck in and of itself.

Instrumentation

Instrumentation is the process of adding extra code at runtime to an existing codebase in order to measure timings of different operations. For example you might take the controller method in a web application - instrument the start and end of the method to measure how long the method took.
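The start-and-end timing described above can be sketched with a JDK dynamic proxy, which wraps every call on an interface at runtime. This is a simplification under stated assumptions: real instrumentation agents typically rewrite bytecode rather than use proxies, and the `TimingProxy` class and `GreetingController` interface are made up for the example.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.LongAdder;

/** Hypothetical helper that times every call made through an interface. */
final class TimingProxy {
    /** Total nanoseconds spent per method name, across all wrapped objects. */
    static final Map<String, LongAdder> TOTAL_NANOS = new ConcurrentHashMap<>();

    @SuppressWarnings("unchecked")
    static <T> T wrap(Class<T> iface, T target) {
        return (T) Proxy.newProxyInstance(
                iface.getClassLoader(),
                new Class<?>[] {iface},
                (proxy, method, args) -> {
                    long start = System.nanoTime(); // instrumented "start of method"
                    try {
                        return method.invoke(target, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause(); // surface the target's own exception
                    } finally {
                        // instrumented "end of method"
                        TOTAL_NANOS.computeIfAbsent(method.getName(), k -> new LongAdder())
                                .add(System.nanoTime() - start);
                    }
                });
    }

    /** Hypothetical controller interface used only for this illustration. */
    interface GreetingController {
        String handle(String name);
    }

    public static void main(String[] args) {
        GreetingController real = name -> "Hello, " + name;
        GreetingController timed = wrap(GreetingController.class, real);
        System.out.println(timed.handle("world"));
        System.out.println("nanos in handle(): " + TOTAL_NANOS.get("handle").sum());
    }
}
```

Every wrapped call pays for two clock reads, a reflective invocation and a map update, which is a small preview of why overhead grows with the amount of instrumentation.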

Instrumentation can be automated by running a Java or JVMTI agent in your production system that modifies the bytecode that the JVM runs, weaving instrumentation code in. This makes an instrumentation based system easy to integrate - many Application Performance Monitoring tools take this approach to provide a good out-of-the-box experience. Just attaching the agent at startup weaves in the necessary monitoring code.
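For a feel of what such an agent looks like, here is a skeleton built on the standard `java.lang.instrument` API. It is only a sketch: the transformer counts the classes it is shown and leaves their bytecode untouched, where a real APM agent would rewrite it with a bytecode library. The `CountingAgent` name is hypothetical, and when packaging a real agent the class would be declared public and named in the `Premain-Class` attribute of the agent jar's manifest, with the JVM started using `-javaagent:`.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.security.ProtectionDomain;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical agent skeleton. Declare it public in its own file for real use. */
final class CountingAgent {
    static final AtomicLong CLASSES_SEEN = new AtomicLong();

    /** Invoked by the JVM before the application's main() when loaded as an agent. */
    public static void premain(String agentArgs, Instrumentation inst) {
        inst.addTransformer(new PassThroughTransformer());
    }

    /** Sees each class as it is loaded; this is where bytecode would be rewritten. */
    static final class PassThroughTransformer implements ClassFileTransformer {
        @Override
        public byte[] transform(ClassLoader loader, String className,
                                Class<?> classBeingRedefined,
                                ProtectionDomain protectionDomain,
                                byte[] classfileBuffer) {
            CLASSES_SEEN.incrementAndGet();
            return null; // null tells the JVM to keep the original bytecode
        }
    }

    public static void main(String[] args) {
        // Direct call for demonstration only; normally the JVM calls transform().
        new PassThroughTransformer().transform(null, "com/example/Foo", null, null, new byte[0]);
        System.out.println("classes seen: " + CLASSES_SEEN.get());
    }
}
```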

The problem with an instrumentation based approach is that the more instrumentation code you add - the bigger the overhead. So there’s a limit to how fine grained you can make the instrumentation. For example, instrumenting every method in a program in order to identify how long each one runs can slow the application down by 2-3 times. Overhead matters here because this is a production environment. Often instrumentation based approaches focus on big picture issues such as instrumenting web service requests or communication patterns between microservices.

The other big limitation to instrumentation based approaches is that they can only instrument what the author of the instrumentation agent thought about ahead of time. For example at Opsian we had a customer with an HTTP endpoint that would be slow every 5 seconds, with individual requests taking 2-3 seconds. They were using an instrumentation based APM tool which didn’t tell them why they were slow and even denied that there were any slow requests! The problem was that they were instrumenting the request at the Servlet API level. In actual fact the problem was that the customer’s Tomcat configuration expired its resources cache every 5 seconds and on load one resource was trying to scan the entire classpath - causing a multisecond reload.

You may write this off as a single, isolated, issue with instrumentation based tools but there’s a general principle here. If you’re not instrumenting the right thing up front, ahead of time, then you won’t spot it when a problem happens.

Continuous Profiling

Profiling is measuring what part of your application is consuming a particular resource. For example, you could execution profile in order to identify what methods are consuming CPU time. You could memory profile in order to understand what lines of code allocate the most objects. You can even profile more esoteric hardware functions, for example where are your CPU Cache misses coming from?
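The core idea of execution profiling by sampling fits in a few lines: look at what a thread is doing at regular intervals and count how often each method shows up. The sketch below does this with `Thread.getStackTrace()`. It is deliberately naive and the `NaiveSampler` class is hypothetical; stack traces taken this way are collected at JVM safepoints, which makes them both more costly and less accurate than the low overhead approaches discussed later in this section.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sampler that counts which method is on top of a thread's stack. */
final class NaiveSampler {

    /** Takes up to `samples` snapshots of the target's top frame, `intervalMillis` apart. */
    static Map<String, Integer> sample(Thread target, int samples, long intervalMillis) {
        Map<String, Integer> hits = new HashMap<>();
        for (int i = 0; i < samples; i++) {
            StackTraceElement[] stack = target.getStackTrace();
            if (stack.length > 0) {
                String frame = stack[0].getClassName() + "." + stack[0].getMethodName();
                hits.merge(frame, 1, Integer::sum);
            }
            try {
                Thread.sleep(intervalMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break; // stop sampling if the sampler itself is interrupted
            }
        }
        return hits;
    }

    /** Stand-in CPU-bound workload that runs until its thread is interrupted. */
    static void burnCpu() {
        long x = 0;
        while (!Thread.currentThread().isInterrupted()) {
            x += System.nanoTime() % 7;
        }
    }

    public static void main(String[] args) {
        Thread worker = new Thread(NaiveSampler::burnCpu, "worker");
        worker.setDaemon(true);
        worker.start();
        Map<String, Integer> hits = sample(worker, 50, 10);
        worker.interrupt();
        hits.forEach((frame, count) -> System.out.println(count + "\t" + frame));
    }
}
```

The methods with the most hits are where the thread spent most of its time, which is exactly the kind of answer metrics alone can’t give you.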

Continuous Profiling is the ability to get always-on profiling data from production into the hands of developers quickly and usably. This entails being able to aggregate and understand that data from a production system in a monitoring tool, in a similar way to how existing APM tools handle instrumentation and metrics.

Profiling requires no integration by developers and can be done automatically for an entire platform. For example at Opsian we profile any JVM based application no matter what programming language or framework is used. It doesn’t make any assumptions about the location of problems in the same way that a framework specific instrumentation approach would do.

The major downside of continuous profiling has been that most traditional profilers are just too slow to use in production. Tools like JVisualVM or hprof just have too much overhead due to the way that they are implemented. They’re often impractical to use in a production setting. For example they can’t aggregate over a fleet of microservice JVMs - they’re focused on being used on a single process at a time.

However there is a new breed of more efficient JVM profilers that have low enough overhead to be used in production - for example Opsian, Async Profiler or Java Mission Control. These profilers use Operating System signal handlers in order to time their collection of profiling related data and can use the JVM’s internal AsyncGetCallTrace based API and/or the Linux perf system. At Opsian we’ve also solved the practical problems around aggregating your data across different JVMs and understanding historical and time based profiling data.

In 2018 continuous profiling isn’t that common an approach for understanding performance problems due to the tooling issues mentioned above, but it is growing in popularity.

Conclusion

At the end of the day different approaches for gathering information help solve different use cases. They all have their sweet spots, their pros and their cons.

Metrics can be used to identify if a problem exists and isolate a specific subsystem from the big picture. Logs can be used for GC and highly customisable information events. Code Instrumentation based approaches are useful for tracking requests across systems. They can also be used with agents to automatically gather information, minimising the overhead of setting up and installing the monitoring. Continuous Profiling provides a complete understanding of what’s going on - right down to the line of code.

If you’ve read this far then we hope you’ve found something of value. Whilst many articles cover these different topics separately, here you’ve learned how these approaches compare with each other head to head.

So many production systems only implement one or two of these approaches and as a result can encounter downtime or performance issues that leave a bad taste in your customers’ mouths. Being able to fix problems in a rapid and agile fashion depends on being able to measure and understand what a system is doing in production.

Richard Warburton

&

Sadiq Jaffer
