Why Java's TLABs are so important and why write contention is a performance killer in multicore environments

The JVM’s garbage collectors make use of Thread-Local Allocation Buffers (TLABs) to improve allocation performance. In this article we’re going to understand what TLABs are, how they affect the code generated by the JIT for allocation and what the resulting effect on performance is. Along the way we’ll learn about the organisation of CPU caches and how multiple CPU cores keep their contents coherent and how you can use this knowledge to avoid multithreaded scalability bottlenecks.

Let’s begin by discussing what TLABs are and why they’re necessary. TLABs are blocks of the Java heap that each JVM thread has into which it can allocate. They are exclusive to one thread for the purposes of allocation but as they are part of the Java heap any other thread may reference or access objects within them. Exclusivity for allocation means an individual thread can allocate efficiently into its TLAB without needing to coordinate or synchronise with any other threads. When a thread runs out of space in its TLAB it requests a new buffer from the JVM’s Garbage Collector. We cover how allocation happens in a TLAB in our related blog post on JVM Allocation Prefetching but in summary, it boils down to incrementing a pointer.

CPU Cache Coherency

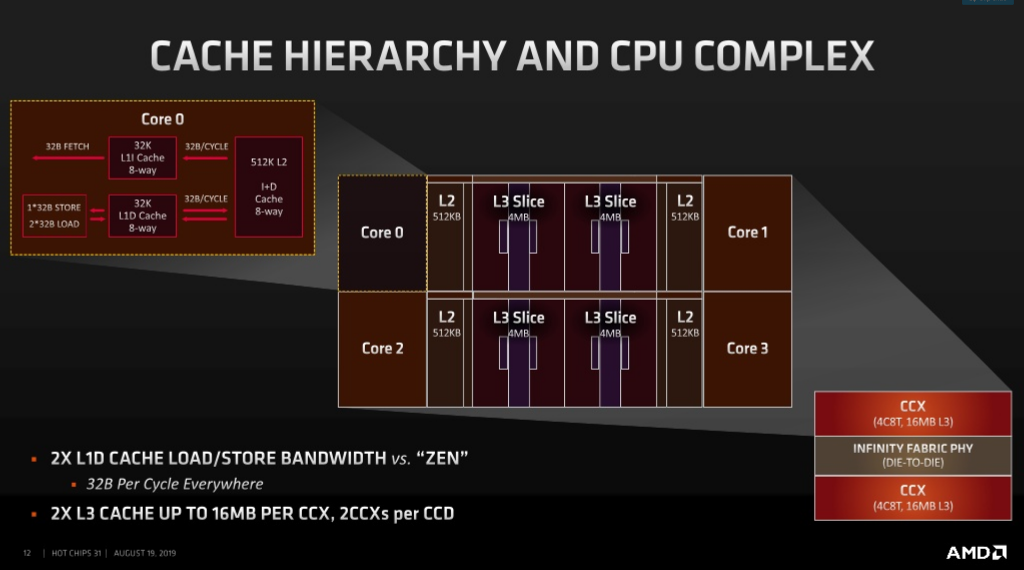

Why are we trying to avoid incrementing the allocation pointer from multiple threads? To understand this we need to look at how caches are organised amongst multiple cores in a multicore processor. Memory in caches is organised in cache lines. These are sequential blocks of normally a fixed size that depends on the CPU architecture and model, on modern AMD/Intel x86 cores this is 64 bytes. There are multiple levels of caches with two or three being usual and with some caches shared between groups or all cores whilst others are exclusive to an individual core. The configuration depends very much on the architecture and specific model of processor. As an example, the AMD’s “Zen 2” Ryzen 7 3700X has 8 cores each of which has a 64kb L1 cache (half for instructions, half for data) and a 512kb L2 cache. The Ryzen 7 cores are in two groups of four, each group of four share a 16MB L3 cache.

AMD’s Zen 2 cache configuration (taken from https://www.slideshare.net/AMD/the-path-to-zen-2)

Keeping all of these caches in sync is what’s called a cache coherency protocol. It specifies a set of states that each cache line can be in and the valid transitions between those states. The most common protocol on x86 is MESI and both Intel and AMD use variants of it. For each cache, their cache lines can be in one of the following states under MESI:

- Modified (M) - this contents of the cache line have been modified and this cache has the only copy. All other caches corresponding lines are marked Invalid.

- Exclusive (E) - this cache has the only copy of this cache line and it matches main memory. All other caches corresponding lines are marked Invalid.

- Shared (S) - this cache line matches memory and is present in other caches, other caches may have this line marked Shared too.

- Invalid (I) - this cache line is empty.

AMD and Intel’s variants (MOESI and MESIF respectively) add additional states that can reduce writes to main memory and also optimise traffic in NUMA environments.

At a very high level, a memory write under MESI goes as follows:

- If the cache line is in present in our cache

- If Exclusive then modify and transition the line to Modified

- If Modified then modify and leave in Modified

- If Shared then make other caches invalidate their copies, modify and move to Modified

- If the cache line is not present in our cache

- If another cache has the line in Modified they send the line contents to this cache and also to main memory, marking their line Invalid

- If other caches have the line in Exclusive or Shared state they send their contents and mark their lines Invalid

As we can see, concurrent memory writes to the same location from multiple cores can involve cache lines being invalidated and changing state fairly rapidly. The term often used for this effect is called cache line ping-pong.

Why caches matter for allocation

The main takeaways from the above steps is that if we have a cache line exclusively or in the modified state then we don’t need to force other caches to do work. If the cache line is shared with other caches we need them to invalidate. Invalidating means those cache lines are now no longer present in other cores’ caches. Subsequent reads could involve cores stalling to re-acquire the data from other caches (50+ cycles depending on which cache and the topology) or worse hitting main memory itself (100s of cycles). This is in contrast with a L1 cache hit which, on the i7 4770k (Haswell) we’ll use later on for benchmarking, is in the region of 4-5 cycles.

Put into the context of memory allocation, every thread competing to read and write a single allocation pointer would lead to each core cache repeatedly needing to take ownership of the allocation pointer’s cache line and all other cores invalidating their copies. In comparison, with TLABs each thread has its own local allocation pointer into its buffer which is not visible to other cores and avoids the need to synchronise with other caches.

Testing allocation with and without TLABs

That’s the theory, let’s see how well it applies in practise. To test this we’re going to conduct some multithreaded benchmarks using Java’s Parallel Garbage Collector on JDK 13.0.2 with and without TLABs.

We’re using the Parallel GC because for all our benchmarks the instructions for manipulating the allocation pointer get inlined into generated code by the JIT. Other collectors like G1 and CMS actually call in to the collectors themselves on allocation which makes things a little harder to demonstrate. Both G1 and CMS also serialize allocations in the non-TLAB case by taking a lock.

For this we will use the excellent JMH and the benchmarks themselves are:

@State(Scope.Benchmark)

public class TlabTest {

@Benchmark

@Fork(jvmArgsAppend = {"-XX:+UseParallelGC","-XX:+UseTLAB"})

@Threads(1)

public Object allocateWithTLABOneThread() {

return new Object();

}

@Benchmark

@Fork(jvmArgsAppend = {"-XX:+UseParallelGC","-XX:-UseTLAB"})

@Threads(1)

public Object allocateWithoutTLABOneThread() {

return new Object();

}

}

It’s worth noting that we’re using Object here which is the smallest allocation we can actually do. This is unrealistic because nobody really allocates like this (if you do, please let me know) but magnifies the effect of the allocation machinery so we can clearly see what effect TLABs have. We’ll look at some benchmarks which allocate larger objects near the end of this post.

We run the benchmarks with the -prof perfasm option so we can dump out the assembly for the two benchmarks. We have simplified and rearranged the assembly below a little to make it easier to read.

For allocateWithTLABOneThread we have:

try_allocation: // Load the current thread-local allocation pointer mov 0x118(%r15),%rax // Compute new thread-local allocation pointer by adding allocation size (16 bytes) mov %rax,%r10 add $0x10,%r10 // Check that this doesn't go beyond the TLAB limit, if it does call in to the GC to refill cmp 0x128(%r15),%r10 // check this is not over our buffer jb continue_allocation .. call in to GC and deal with refilling buffer .. // Allocation was inside TLAB limit, so update limit continue_allocation: mov %r10,0x118(%r15) // Prefetch for next allocation prefetchnta 0xc0(%r10) // prefetch for future allocations .. initialise object with mark word and klass pointer .. .. benchmark loop logic ..

For allocateWithoutTLABOneThread we have:

try_allocation: // Load the current global allocation limit into %r10 movabs $0x7f764804d2d0,%r10 mov (%r10),%r10 increment_allocation_pointer: // Load the current global allocation pointer into %r11 and %rax movabs $0x7f764804d2f8,%r11 mov (%r11),%rax mov %rax,%r11 // Compute new global allocation pointer by adding allocation size (16 bytes) add $0x10,%r11 // Is the new pointer over the limit? If so call in to the GC cmp %r10,%r11 jnb call_gc // Atomic compare-and-swap (CAS) global allocation pointer (address in %r9). Old expected value is in %rax, new value is in %r11 movabs $0x7f764804d2f8,%r9 lock cmpxchg %r11,(%r9) // If the CAS succeeded continue to initialise the object - otherwise retry je continue_allocation // success we can go initialise the object jmp increment_allocation_pointer // failure we need to try again continue_allocation: // New object address in %r11. Increment thread allocations stats and prefetch for the next allocation, then continue with initialisation. addq $0x10,0x190(%r15) // bump thread allocated bytes stats prefetchnta 0xc0(%r11) // prefetch for future allocations .. initialise object with mark word and klass pointer .. .. benchmark loop logic ..

As you can see the case without TLABs is a little more involved and also explicitly needs to deal with contention amongst threads, where one thread might be incrementing the allocation pointer in between another thread having loaded it and attempting to increment it.

The single threaded case

Let’s see how the two single threaded cases stack up on a Intel Core i7-4770 CPU:

| Test | Op/s (throughput) | Error (for 99.9% CI) |

| allocateWithTLABOneThread | 279,772,768 | ± 4,153,915 |

| allocateWithoutTLABOneThread | 101,167,412 | ± 128,394 |

So even with one thread where we’re sure there’s no contention, we’re only seeing half the performance when compared to using a TLAB. This is because the Atomic Compare-And-Swap (CAS) instruction required when not using a TLAB (lock cmpxchg in the above assembly snippet) is more expensive than the normal load and store combination used in the TLAB test.

Digging deeper on Atomic CASes

There are a couple of reasons for this. The first is that, on modern x86 cores, a normal store doesn’t immediately reach the processor cache. It is first written to a “store buffer” and execution can continue while the buffer drains to caches. Subsequent loads from the same address can be retrieved from the store buffer even before the caches are up to date.

With LOCK-prefixed instructions (such as the CMPXCHG in our test) we need to wait until the store involved in the ‘swap’ part of the operation is drained from the buffer otherwise other cores reading from memory could see the older value. This incurs more latency than a normal store as the processor is essentially unable to perform any work or “stalled” while the drain is happening.

The second reason is that LOCK-prefixed instructions go further than just requiring the store from the ‘swap’ to drain to memory, they require all buffered writes to drain to memory and wait for all previous instructions to complete. They also function as reordering barriers and prevent later loads from being executed before them which eliminates some potential optimisations the processor could do. It’s worth pointing out again that this all happens regardless of contention with other cores. These are fundamental fixed costs for an Atomic CAS on a modern x86 processor.

To make diagnosing performance issues easier processors can come with a Performance Monitoring Unit (PMU). These units are able to monitor performance events that occur in the processor. The i7 4770k we’re using for these benchmarks has hundreds of events that are monitorable, nearly all of which can be found in Intel’s guide. Linux’s in-built perf infrastructure has strong support for reading these events and summarising them, which JMH can use to display performance counter information for benchmark runs.

Running the JMH tests again with -prof perfnorm:events=cycles,resource_stalls.sb shows the difference between the two tests:

| Test | cycles/iter | resource_stalls.sb/iter |

| allocateWithTLABOneThread | 19.192 | 0.841 |

| allocateWithoutTLABOneThread | 43.841 | 16.237 |

Without TLAB test iterations take nearly twice as many cycles and the vast majority of those extra cycles are spent stalled waiting on the store buffer to drain. We can pinpoint where this happens by using -prof perfasm:events=resource_stalls.sb to profile based on resource stall events rather than cycles. This shows lock cmpxchg being responsible for ~98% of the stalls.

The multithreaded cases

So we’ve seen that uncontended Atomic CASes are more expensive than normal loads and stores. Let’s look at how things change when there’s contention:

| Test | Op/s (throughput) | Error (for 99.9% CI) |

| allocateWithTLABOneThread | 279,772,768 | ± 4,153,915 |

| allocateWithTLABFourThreads | 533,445,801 | ± 12,529,311 |

| allocateWithoutTLABOneThread | 101,167,412 | ± 128,394 |

| allocateWithoutTLABFourThreads | 11,922,615 | ± 116,838 |

Ouch. Whereas with a TLAB going from one to four threads nearly doubles our allocation throughput, without a TLAB we see our allocation rate drop ~9x going from one to four cores. What’s happening? For this we need to look at the performance counters again:

| Test | cycles/iter | cycle_activity.cycles_no_execute/iter |

| allocateWithTLABOneThread | 21.061 | 4.719 |

| allocateWithTLABFourThreads | 29.153 | 13.333 |

| allocateWithoutTLABOneThread | 45.319 | 23.738 |

| allocateWithoutTLABFourThreads | 1301.448 | 1133.132 |

An absolutely monstrous number of cycles for the four thread test without the TLAB, of the 1,300 cycles.

To start prising apart why this test is taking so long we should try to understand the effects contention can have on performance. The first is at a logical level, we are incrementing the global allocation pointer and then trying to swap the existing value for the new one hoping that no other thread has beat us to it. If they have, we need to redo that work. We can actually quantify how often this happens because there’s conveniently only one atomic load in the test and that’s part of the atomic CAS so we can use the mem_uops_retired.lock_loads performance counter to work out how many times we’ve had to retry.

| Test | mem_uops_retired.lock_loads/iter |

| allocateWithoutTLABFourThreads | 2.732 |

| allocateWithoutTLABOneThread | 1.005 |

As a sanity check, we can see that in the uncontended case there’s only one atomic load per iteration but when we experience contention we’re encountering around 1.7 failed attempts to move the allocation pointer per iteration before we have a success. This explains at least some of the drop in throughput.

The second effect of contention relates to the cache coherency background we went through earlier in the article. When a core goes to CAS the allocation pointer the majority of the time it was not the last thread to successfully do so and this means that the cache line containing the allocation pointer will now be in another core’s cache in the Modified state. For it to be in the Modified state on one core means it must be in the Invalid state in other caches. We can look at a performance counter to see this in action:

| Test | mem_load_uops_l3_hit_retired.xsnp_hitm/iter |

| allocateWithoutTLABFourThreads | 2.235 |

| allocateWithoutTLABOneThread | <0.001 |

The mem_load_uops_l3_hit_retired.xsnp_hitm event tells us when a memory load ends up hitting the cache of another core and that core has the cache line in a Modified state (hence hitm). We see that with only a single thread this almost never happens. With four threads this happens several times an iteration. This ping-ponging of cache lines causes a significant increase in latency for the atomic CAS:

| Test | % of cycles stalled on memory subsystem | % L1 cache hit rate |

| allocateWithoutTLABFourThreads | 88.64% | 77.42% |

| allocateWithoutTLABOneThread | 14.12% | 98.54% |

The vast majority of cycles were spent stalled waiting on the memory subsystem, that is a load or a store with significant latency that prevent the core from progressing and stall execution. We also see a significant drop in the L1 cache hit rate which, by profiling with -prof perfasm:events=L1-dcache-load-misses, we localise to the movabs $0x7f764804d2f8,%r11andlock cmpxchg %r11,(%r9) instructions. These two instructions are responsible for nearly 95% of L1 cache misses, which makes sense as they both are trying to load the same location - the current global allocation pointer.

We said earlier on that using Object for the benchmarks represented the worst case for allocation, as Object is the smallest allocation we can do. Let’s have a quick look at how things look for two other types of allocation: SmallObj (48 bytes) and LargeObj (144 bytes). You can see the code for JMH benchmark here.

| Test | Op/s (throughput) |

Error (for 99.9% CI) |

| TlabTest.allocateWithTLABOneThreadsSmall | 170,904,042 |

2,869,847 |

| TlabTest.allocateWithTLABOneThreadsObj | 273,211,833 |

5,051,955 |

| TlabTest.allocateWithTLABOneThreadsLarge | 55,817,555 |

2,013,760 |

| TlabTest.allocateWithTLABFourThreadsSmall | 172,656,953 |

5,508,839 |

| TlabTest.allocateWithTLABFourThreadsObj | 484,996,880 |

10,024,481 |

| TlabTest.allocateWithTLABFourThreadsLarge | 56,898,809 |

2,192,034 |

| TlabTest.allocateWithoutTLABOneThreadSmall | 84,660,996 |

459,250 |

| TlabTest.allocateWithoutTLABOneThreadObj | 100,806,624 |

173,268 |

| TlabTest.allocateWithoutTLABOneThreadLarge | 48,119,714 |

111,136 |

| TlabTest.allocateWithoutTLABFourThreadsSmall | 11,822,187 |

112,027 |

| TlabTest.allocateWithoutTLABFourThreadsObj | 12,030,722 |

72,315 |

| TlabTest.allocateWithoutTLABFourThreadsLarge | 11,695,500 |

66,945 |

As we can see Object allocations represent the worst case - they show the highest throughput and the biggest drop when removing TLABs. This is because when allocating larger objects the cost of initialisation starts to become a larger factor in performance. That said when encountering contention without TLABs enabled all three benchmarks show roughly similar allocation performance in terms of objects allocated per second - that’s because contention hits the number of allocations not bytes allocated. It’s curious that more initialisation doesn’t lead to fewer failed compare-and-swaps and thus better performance though.

Recap

I think it’s fair to say at this point that if you were thinking of disabling TLABs you should probably reconsider that decision - even in the case where you only have a single thread. We’ve seen how enabling and disabling TLABs changes the generated JIT code for our performance benchmarks and how even uncontended atomic CAS operations can be more costly than a normal load and store. In the contended without-TLAB benchmark we’ve seen the dramatic performance impact of write contention with cache lines being moved and invalidated frequently.

While the examples in this article revolve around the JVM’s TLABs, the conclusions from what we’ve seen are applicable to software design in multithreaded environments. Namely that shared state that is frequently written to by multiple threads should be avoided as it fundamentally limits yours scalability for the reasons we’ve seen earlier. Instead, if possible, aggregate state locally on different threads and then merge it with global state at a later time.

As we advise with all optimisation efforts though, make sure you are optimising the right thing. You need to understand where the real bottlenecks in your application are. These are very difficult to evaluate outside of a production environment.

Acknowledgements

Thanks to Aleksey Shipilëv for the initial suggestion of looking at the effect of write contention via TLABs on the ParallelGC and detailed feedback on this blog post. Thanks to Richard Warburton for reviews and editing.

Sadiq Jaffer

Sadiq Jaffer