How a march to war, Persian poetry and an obscure 1950s psychology technique can help you scale

You might be wondering what the second Gulf War, Persian poetry and 1950s psychology techniques have to do with scaling your platform. In this post we’ll see how each feeds into a technique for better understanding and improving the performance of your software.

On February 12th, 2002, when a reporter asked whether there was evidence of a direct link between Baghdad and certain terrorist organisations, Donald Rumsfeld said the following:

There are known knowns; there are things we know we know. We also know there are known unknowns; that is to say we know there are some things we do not know. But there are also unknown unknowns—the ones we don't know we don't know. And if one looks throughout the history of our country and other free countries, it is the latter category that tend to be the difficult ones.

Although ridiculed at the time as an eloquent tap dance around the question, the statement shed light on a powerful technique used by NASA and the Department of Homeland Security.

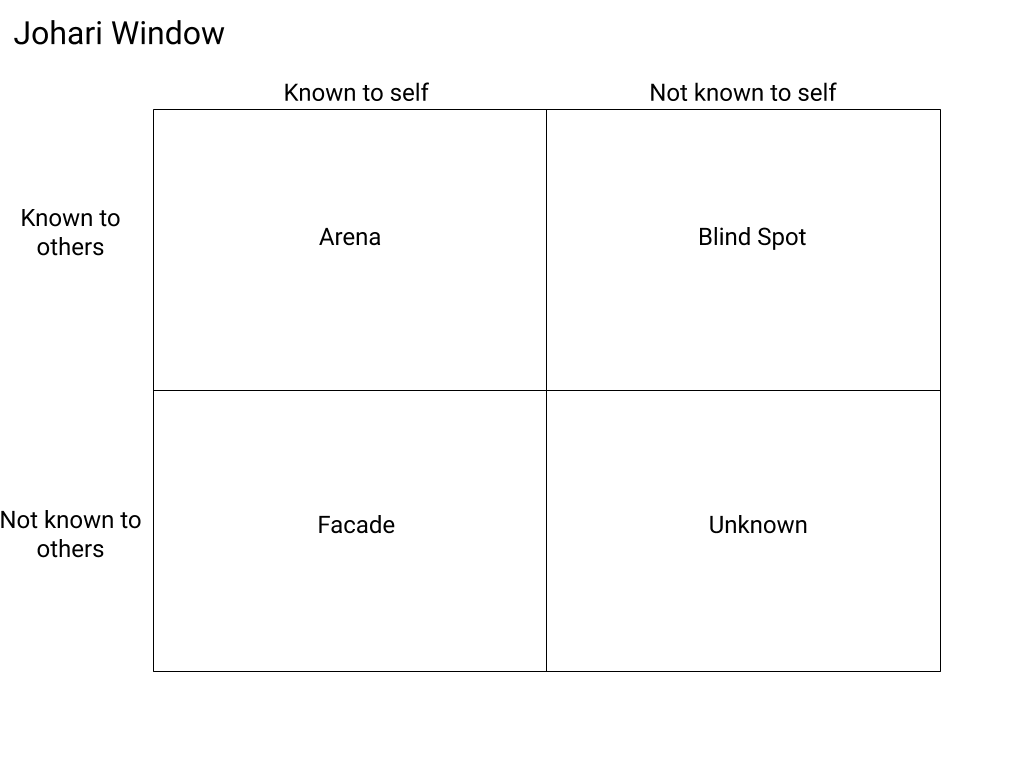

The technique originates in the Johari Window, an exercise created in the 1950s by Joseph Luft and Harrington Ingham. It helps a person understand how they see themselves and how others see them: the subject and their peers each pick, from a fixed list of adjectives, the ones that best describe the subject. These adjectives are then arranged in a two-by-two “window”:

Adjectives picked by both the subject and their peers go in the top-left box; those picked by the peers but not by the subject go in the top right; those picked by the subject but not by the peers go in the bottom left; and the remaining, unpicked adjectives go in the bottom-right box.

What do the boxes mean?

The Arena holds the known knowns: the properties that both the subject and their peers know and agree on. The Blind Spot holds properties that the peers agree on but that the subject doesn’t recognise in themselves; seeing these can help the subject understand how their peers perceive them. The Façade contains the properties the subject believes they have but which their peers don’t perceive. These could be hidden, or they might simply be false beliefs. Lastly, the Unknown box holds all the adjectives that weren’t chosen by either the subject or their peers.
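The placement rules come down to two membership tests per adjective: did the subject pick it, and did the peers pick it? A minimal Java sketch of that sorting step follows (Java is chosen to match the JVM services discussed later in this post). The `JohariWindow` class, the `Pane` enum and the sample adjectives are illustrative assumptions, not part of Luft and Ingham's exercise.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

public class JohariWindow {

    /** The four boxes of the window. */
    public enum Pane { ARENA, BLIND_SPOT, FACADE, UNKNOWN }

    /**
     * Places every adjective from the fixed list into exactly one pane,
     * depending on whether the subject, their peers, both or neither picked it.
     */
    public static Map<Pane, Set<String>> classify(Set<String> allAdjectives,
                                                  Set<String> pickedBySubject,
                                                  Set<String> pickedByPeers) {
        Map<Pane, Set<String>> window = new EnumMap<>(Pane.class);
        for (Pane pane : Pane.values()) {
            window.put(pane, new TreeSet<>()); // sorted for readable output
        }
        for (String adjective : allAdjectives) {
            boolean bySubject = pickedBySubject.contains(adjective);
            boolean byPeers = pickedByPeers.contains(adjective);
            Pane pane;
            if (bySubject && byPeers) {
                pane = Pane.ARENA;       // known to self and to others
            } else if (byPeers) {
                pane = Pane.BLIND_SPOT;  // known to others, not to self
            } else if (bySubject) {
                pane = Pane.FACADE;      // known to self, not to others
            } else {
                pane = Pane.UNKNOWN;     // picked by nobody
            }
            window.get(pane).add(adjective);
        }
        return window;
    }

    public static void main(String[] args) {
        // Hypothetical adjective list and picks, for illustration only.
        Set<String> all = Set.of("calm", "confident", "helpful", "patient", "shy", "witty");
        Set<String> subject = Set.of("calm", "patient", "witty");
        Set<String> peers = Set.of("calm", "helpful", "shy");
        classify(all, subject, peers).forEach((pane, adjectives) ->
                System.out.println(pane + ": " + adjectives));
    }
}
```

With the sample picks in `main`, "calm" lands in the Arena, "helpful" and "shy" in the Blind Spot, "patient" and "witty" in the Façade, and "confident" in the Unknown box.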

This technique was soon extended for use in analysis and planning. When applying it to planning, we first set out the known facts about the situation: the unambiguous properties we can ascertain ahead of time, such as the geography of the terrain, the resources at our disposal and the unchanging facts of the situation.

Once we have established those certainties (the known knowns), we tackle the properties we know will affect the outcome but about which we have no certainty: the resources competitors may have, the rate at which required technologies will improve, the morale of opposing forces, and so on. We then make allowances in the analysis or plan to measure or estimate these known unknowns and adapt the execution accordingly.

Finally, we have the unknown unknowns: the unpredictable properties of the situation that affect our plan but of which we have no knowledge. These are the wrecking balls that can derail even the best-laid plans: a financial meltdown in the middle of an election period, freak weather that decimates your supply lines, or a disruptive technology that makes your industry obsolete. A good analysis tries to uncover as many realistic unknown unknowns as possible and, where it can, turn them into known unknowns. As known unknowns they can be monitored and potentially mitigated.

Scalability and performance

We understand how these concepts can be used in planning, but how do they apply to scalability? Suppose we have a service whose performance we want to improve. We begin the performance analysis with our known knowns: the facts we know about the service. What language is it written in? What runtime does it use? What libraries does it rely on? How is it structured internally? Which JVM version does it run on? On what hardware will it be deployed? From these we build up a picture of everything we unambiguously know about the service.
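Some of these known knowns can be read straight from the running process rather than from documentation that may have drifted. A minimal Java sketch, assuming the service runs on the JVM (as the JVM-version question implies); the `KnownKnowns` class and its key names are illustrative, and facts such as the libraries in use or the internal structure still have to come from the codebase itself.

```java
import java.lang.management.ManagementFactory;
import java.util.LinkedHashMap;
import java.util.Map;

public class KnownKnowns {

    /** Gathers facts that the running JVM can report about itself and its host. */
    public static Map<String, String> collect() {
        Runtime runtime = Runtime.getRuntime();
        Map<String, String> facts = new LinkedHashMap<>();
        facts.put("java.version", System.getProperty("java.version"));
        facts.put("java.vm.name", System.getProperty("java.vm.name"));
        facts.put("os.name", System.getProperty("os.name"));
        facts.put("os.arch", System.getProperty("os.arch"));
        facts.put("cpus", String.valueOf(runtime.availableProcessors()));
        // maxMemory() returns Long.MAX_VALUE when the heap has no inherent limit.
        facts.put("max.heap.mb", String.valueOf(runtime.maxMemory() / (1024 * 1024)));
        // Options the JVM was started with (for example -Xmx or a GC choice).
        facts.put("jvm.args", String.join(" ", ManagementFactory.getRuntimeMXBean().getInputArguments()));
        return facts;
    }

    public static void main(String[] args) {
        collect().forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

Logging this once at startup gives every later measurement a record of exactly which runtime, flags and hardware it was taken on.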

Next we enumerate the known unknowns: the properties of the service that involve some unknown quantity. These include the response times of the services it talks to, the sizes and latencies of internal queues, the timing of key parts of the computation, and the relative speeds of memory, disk and network I/O. In short, anything we know ahead of time could be an issue but whose specifics in production we’re unsure of. For these we usually add metrics to our own code and rely on tooling to instrument other parts of the system. After all, as the saying often attributed to Peter Drucker goes: “If you can't measure it, you can't manage it.”
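For a known unknown such as a downstream service's response time, the simplest form of adding metrics to our own code is to time each call and summarise the distribution. The following sketch is hand-rolled for illustration: `LatencyRecorder` and `callDownstreamService` are hypothetical names, it keeps every sample in memory, and in practice you would more likely use an established metrics library.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

public class LatencyRecorder {
    // Every sample is kept for simplicity; a real metrics library would bound memory.
    private final List<Long> samplesNanos = new ArrayList<>();

    /** Runs the call, records its wall-clock duration and returns its result. */
    public <T> T time(Supplier<T> call) {
        long start = System.nanoTime();
        try {
            return call.get();
        } finally {
            record(System.nanoTime() - start);
        }
    }

    public synchronized void record(long nanos) {
        samplesNanos.add(nanos);
    }

    public synchronized int count() {
        return samplesNanos.size();
    }

    /** Nearest-rank percentile, e.g. 50 for the median or 99 for the tail. */
    public synchronized long percentileNanos(double percentile) {
        if (percentile <= 0 || percentile > 100) {
            throw new IllegalArgumentException("percentile must be in (0, 100]");
        }
        if (samplesNanos.isEmpty()) {
            throw new IllegalStateException("no samples recorded yet");
        }
        List<Long> sorted = new ArrayList<>(samplesNanos);
        Collections.sort(sorted);
        int rank = (int) Math.ceil(percentile * sorted.size() / 100.0);
        return sorted.get(rank - 1);
    }

    public static void main(String[] args) {
        LatencyRecorder downstream = new LatencyRecorder();
        for (int i = 0; i < 100; i++) {
            downstream.time(LatencyRecorder::callDownstreamService);
        }
        System.out.printf("calls=%d p50=%dus p99=%dus%n", downstream.count(),
                downstream.percentileNanos(50) / 1_000, downstream.percentileNanos(99) / 1_000);
    }

    // Hypothetical stand-in for a request to another service.
    private static String callDownstreamService() {
        try {
            Thread.sleep(2);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "ok";
    }
}
```

Reporting percentiles rather than an average keeps occasional slow responses visible instead of letting them be averaged away.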

Unfortunately, this is where most production performance monitoring stops. No attempt is made to uncover unknown unknowns, and in our experience these account for a large proportion of the performance bottlenecks that systems hit in production.

Consider a logging library configured to write synchronously, blocking application threads while they wait on disk; a misconfigured application server that constantly reloads classes; or blocking network calls mistakenly made on an asynchronous event loop. These are all unknown unknowns that you would not think to measure ahead of time.

Uncovering these unknown unknowns is very difficult with metrics and instrumentation alone. That’s why we’re big believers in performance profiling in production. By capturing samples of a service’s performance in a production environment, a whole class of unknown unknowns can be measured and potentially mitigated before they become a real issue. The benefits of profiling in production become even greater if you can do it continuously: relying on stale data may mean missing new issues caused by changes to the environment or codebase.
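To make the idea of sampling concrete, here is a deliberately simple in-process sampler in Java. It is a sketch, not a production profiler: `StackSampler` is a hypothetical class, `Thread.getAllStackTraces()` brings threads to a safepoint and is therefore both costly and biased, and real tools (Java Flight Recorder, for example) use lower-overhead mechanisms. What it does show is the core technique: periodically record where every thread is, and in what state, without deciding in advance what to measure.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

public class StackSampler {
    // "THREAD_STATE class.method" of the top frame -> number of samples seen there.
    private final Map<String, Integer> hits = new HashMap<>();

    /** Takes one sample: records the top frame and state of every other live thread. */
    public synchronized void sampleOnce() {
        for (Map.Entry<Thread, StackTraceElement[]> entry : Thread.getAllStackTraces().entrySet()) {
            Thread thread = entry.getKey();
            StackTraceElement[] stack = entry.getValue();
            if (thread == Thread.currentThread() || stack.length == 0) {
                continue; // skip the sampling thread itself and threads with no Java frames
            }
            String key = thread.getState() + " " + stack[0].getClassName() + "." + stack[0].getMethodName();
            hits.merge(key, 1, Integer::sum);
        }
    }

    public synchronized Map<String, Integer> snapshot() {
        return new HashMap<>(hits);
    }

    public static void main(String[] args) throws InterruptedException {
        StackSampler sampler = new StackSampler();
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(sampler::sampleOnce, 0, 10, TimeUnit.MILLISECONDS);

        Thread worker = new Thread(StackSampler::busyWork, "worker");
        worker.start();
        worker.join();
        scheduler.shutdown();
        scheduler.awaitTermination(1, TimeUnit.SECONDS);

        // Print the five most frequently sampled (state, frame) pairs.
        sampler.snapshot().entrySet().stream()
                .sorted(Map.Entry.<String, Integer>comparingByValue().reversed())
                .limit(5)
                .forEach(e -> System.out.println(e.getValue() + "  " + e.getKey()));
    }

    // Hypothetical CPU-bound workload standing in for real request handling.
    private static void busyWork() {
        long end = System.nanoTime() + TimeUnit.SECONDS.toNanos(1);
        double x = 0;
        while (System.nanoTime() < end) {
            x += Math.sqrt(x + 1);
        }
        if (x < 0) {
            System.out.println(x); // never true; stops the loop being optimised away
        }
    }
}
```

Because each key includes the thread state, threads stuck waiting on locks (BLOCKED) or on other threads (WAITING, TIMED_WAITING) appear alongside CPU hotspots, even though nobody wrote a metric for them.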

So how should we go about analysing the performance of a service? First, start with everything that is unambiguously known: the known knowns. Next, collect all the known unknowns and measure, log or instrument them. Lastly, explore the system in production to uncover unknown unknowns and convert these into known unknowns, which can then be measured and further analysed.

Finally, some verse from the fourteenth-century Persian poet Ibn Yamin:

One who knows and knows that he knows... His horse of wisdom will reach the skies.

One who knows, but doesn't know that he knows... He is fast asleep, so you should wake him up!

One who doesn't know, but knows that he doesn't know... His limping mule will eventually get him home.

One who doesn't know and doesn't know that he doesn't know... He will be eternally lost in his hopeless oblivion!

Don’t end up in hopeless oblivion: explore your production systems and uncover those unknown unknowns!

Sadiq Jaffer

&

Richard Warburton