Java’s -XX:+AggressiveOpts: Can it slow you down?

You may have noticed that Java has a lot of performance-related command-line options. One of the most popular is -XX:+AggressiveOpts, used in over 20,000 places on GitHub. In fact, when running the benchmarks for our Meltdown article, we noticed that the TechEmpower Rapidoid startup script sets the -XX:+AggressiveOpts JVM flag. It sounds like it will speed up your JVM application, but what does it actually do, and does it really have a beneficial impact? That’s what we investigate in this article.

AggressiveOpts

The man page (for Java 1.8.0_151-b12) says the following:

-XX:+AggressiveOpts

Enables the use of aggressive performance optimization features, which are expected to become default in upcoming releases. By default, this option is disabled and experimental performance features are not used.

So what are these experimental features?

We can look at the HotSpot source to work out what they are in Java 8. AggressiveOpts changes the defaults of two flags: AutoBoxCacheMax and BiasedLockingStartupDelay.

AutoBoxCacheMax

For those less familiar with Java, autoboxing is where the compiler automatically converts between primitive and boxed types, e.g. int and Integer. Autoboxing is mostly a code readability feature: without it, code that mixes primitives and boxed types would need many more explicit conversions.
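As a minimal sketch (the class and method names here are ours, purely for illustration), the two methods below should behave identically. In the first, javac inserts the Integer.valueOf and intValue() calls that the second spells out by hand.

```java
import java.util.ArrayList;
import java.util.List;

public class AutoboxingDemo {

    // With autoboxing: the compiler inserts the conversions for us.
    static int sumAutoboxed(int[] values) {
        List<Integer> boxes = new ArrayList<>();
        for (int v : values) {
            boxes.add(v);                  // boxing: compiled as Integer.valueOf(v)
        }
        int total = 0;
        for (Integer b : boxes) {
            total += b;                    // unboxing: compiled as b.intValue()
        }
        return total;
    }

    // Without autoboxing: the explicit conversions we would otherwise write.
    static int sumExplicit(int[] values) {
        List<Integer> boxes = new ArrayList<>();
        for (int v : values) {
            boxes.add(Integer.valueOf(v));
        }
        int total = 0;
        for (Integer b : boxes) {
            total += b.intValue();
        }
        return total;
    }

    public static void main(String[] args) {
        int[] values = {1, 2, 3, 40000};
        System.out.println(sumAutoboxed(values)); // 40006
        System.out.println(sumExplicit(values));  // 40006
    }
}
```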

Boxing integers repeatedly can be expensive, since each box may be a new heap allocation. Because Java’s boxed types are immutable, there is a cache of pre-allocated objects covering a range of values. Requests for a boxed value in that range (via Integer.valueOf, for example) return a reference to a cached object rather than creating a new one. Intuitively, the most commonly used boxed numbers in an application often fall within a small range, for example 1-10.

Savvy readers will realise this means that some equal pairs of Integer objects are also reference-equal (==) and others aren’t. You should always use the .equals() method when comparing boxed numeric types in Java.
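Here is a small sketch of the pitfall, using a hypothetical BoxedEqualityDemo class. Values from -128 to 127 are guaranteed to come from the cache, so == happens to work for them. For larger values the result of == depends on how big the cache is, while .equals() is always correct.

```java
public class BoxedEqualityDemo {

    // Reference comparison: true only when both variables point at the same object.
    static boolean sameReference(Integer a, Integer b) {
        return a == b;
    }

    // Value comparison: what you almost always want for boxed numbers.
    static boolean sameValue(Integer a, Integer b) {
        return a.equals(b);
    }

    public static void main(String[] args) {
        // Autoboxing calls Integer.valueOf, which always caches -128..127,
        // so both variables refer to the same cached object.
        Integer small1 = 127;
        Integer small2 = 127;
        System.out.println(sameReference(small1, small2)); // true

        // 1000 is outside the default cache: == typically prints false, but it can
        // print true if the cache has been enlarged (e.g. AutoBoxCacheMax=20000).
        Integer big1 = 1000;
        Integer big2 = 1000;
        System.out.println(sameReference(big1, big2));

        // equals() compares the wrapped values, so it prints true regardless.
        System.out.println(sameValue(big1, big2)); // true
    }
}
```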

Byte, Short, Integer and Long all cache values between -128 and 127, but the upper limit of Integer’s cache is configurable via AutoBoxCacheMax. AggressiveOpts sets AutoBoxCacheMax to 20000, which means all Integer values between -128 and 20000 are cached.
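One way to see the effective cache bound on a given JVM is to probe it empirically. In the sketch below, probeCacheHigh is our own hypothetical helper, not a JDK API. It assumes the cache is a single contiguous range starting at -128, and counts upward from 127 until Integer.valueOf stops returning the same object twice. With default flags it should report 127. On Java 8 with -XX:+AggressiveOpts we would expect 20000.

```java
public class IntegerCacheProbe {

    // Hypothetical helper: returns the largest n in [127, limit] for which
    // Integer.valueOf(n) returns the same (cached) object on repeated calls.
    // Assumes the cache is one contiguous range, as in OpenJDK's Integer.
    static int probeCacheHigh(int limit) {
        int n = 127; // always cached, per the Integer.valueOf contract
        while (n < limit && Integer.valueOf(n + 1) == Integer.valueOf(n + 1)) {
            n++;
        }
        return n;
    }

    public static void main(String[] args) {
        // Should print 127 with default flags. On Java 8 with -XX:+AggressiveOpts
        // (or -XX:AutoBoxCacheMax=20000) we would expect 20000.
        System.out.println("Integer cache upper bound: " + probeCacheHigh(1_000_000));
    }
}
```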

BiasedLockingStartupDelay

In order to understand what BiasedLockingStartupDelay does you need to understand what the biased locking optimisation in Java is.

Biased locking aims to deal with the case where a method or block of code is synchronized but only a single thread ever acquires and releases the corresponding lock. This may sound odd: why would you synchronize something but only call it from one thread? It turns out to be very common, since a lot of code is thread-safe but only ever used from a single thread.

In this case, biased locking creates an ultra-fast path that lets the owning thread repeatedly acquire and release the lock without the expense of a Compare and Swap (CAS) operation. If a different thread requests the lock, the bias is revoked and the lock reverts to ordinary locking for a period of time. It may subsequently be rebiased toward another thread. More detail can be found on David Dice’s blog.
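To make the single-threaded case concrete, here is a sketch with a hypothetical SynchronizedCounter class. Its methods are synchronized, but countOnOneThread only ever calls them from the current thread, which is the pattern biased locking is meant to speed up. Whether the JVM actually biases the lock depends on the Java version and flags (Java 8 enables it by default via UseBiasedLocking). The results are the same either way; only the cost of locking differs.

```java
public class SingleThreadedLocking {

    // A thread-safe counter: each call acquires and releases this object's monitor.
    static final class SynchronizedCounter {
        private long count;

        synchronized void increment() {
            count++;
        }

        synchronized long get() {
            return count;
        }
    }

    // Only the calling thread ever touches the counter here: the uncontended,
    // single-owner pattern that biased locking targets.
    static long countOnOneThread(SynchronizedCounter counter, int iterations) {
        for (int i = 0; i < iterations; i++) {
            counter.increment();
        }
        return counter.get();
    }

    public static void main(String[] args) throws InterruptedException {
        SynchronizedCounter counter = new SynchronizedCounter();
        System.out.println(countOnOneThread(counter, 10_000_000)); // 10000000

        // A second thread now takes the same lock. If the lock was biased toward
        // the main thread, the JVM has to revoke that bias before it can proceed.
        Thread other = new Thread(counter::increment);
        other.start();
        other.join();
        System.out.println(counter.get()); // 10000001
    }
}
```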

BiasedLockingStartupDelay sets how long (in milliseconds) after startup the JVM waits before it starts biasing locks. It was originally introduced because biased locking has a cost: revoking a lock’s bias triggers a safepoint. Early JVMs took longer to start up with biased locking enabled immediately, so a delay was added.

Fun fact: from Java 10 the default startup delay will be 0, as there no longer seem to be any performance regressions from biasing locks immediately.

AggressiveOpts reduces the delay from its default of 4 seconds (4000 ms) to 500 ms. Curious.
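Rather than trusting a startup script, you can ask the running JVM which values it actually ended up with. This sketch uses com.sun.management.HotSpotDiagnosticMXBean, which is available on HotSpot-based JVMs; the describe helper is our own. If a given JVM does not recognise a flag name, for example one removed in a later release, getVMOption throws IllegalArgumentException, which we report as "unavailable". On Java 8 with -XX:+AggressiveOpts we would expect AutoBoxCacheMax and BiasedLockingStartupDelay to show 20000 and 500 respectively.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;

public class PrintAggressiveOptsFlags {

    // Returns "value (origin)" for a HotSpot flag, or "unavailable" if this JVM
    // does not recognise the flag name.
    static String describe(HotSpotDiagnosticMXBean hotspot, String name) {
        try {
            VMOption option = hotspot.getVMOption(name);
            return option.getValue() + " (" + option.getOrigin() + ")";
        } catch (IllegalArgumentException e) {
            return "unavailable";
        }
    }

    public static void main(String[] args) {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        String[] names = {
            "AggressiveOpts", "AutoBoxCacheMax", "UseBiasedLocking", "BiasedLockingStartupDelay"
        };
        for (String name : names) {
            System.out.println(name + " = " + describe(hotspot, name));
        }
    }
}
```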

Benchmarking Setup and Results

We set up a bare-metal server (Intel Xeon E3-1270 v6, 32 GB RAM) and installed the openjdk-8-jdk package (Java version 8u151-b12). We then downloaded and installed SpecJVM2008 along with the TechEmpower FrameworkBenchmarks (commit d967f73f8e22a01d8c25d6cb7dd530d9c4e10820).

We ran SpecJVM2008 with no JVM flags and then with -XX:+AggressiveOpts. We then benchmarked the Rapidoid HTTP server’s JSON endpoint with and without -XX:+AggressiveOpts using wrk (8 threads, 1024 connections, 5 runs of 5 minutes each).

SpecJVM2008

| Benchmark | Normal (ops/m) | Aggressive (ops/m) | Change |
|---|---|---|---|
| compiler | 1276.48 | 1248.51 | -2.24% |
| compress | 365.32 | 368.88 | 0.97% |
| crypto | 424.92 | 418.21 | -1.60% |
| derby | 961.28 | 948.66 | -1.33% |
| mpegaudio | 273 | 276.95 | 1.43% |
| scimark.large | 85.42 | 87.61 | 2.50% |
| scimark.small | 600.76 | 599.55 | -0.20% |
| serial | 290.7 | 293.43 | 0.93% |
| startup | 59.99 | 59.02 | -1.64% |
| sunflow | 154.34 | 153.66 | -0.44% |
| xml | 1030.71 | 1035.76 | 0.49% |
| composite | 341.65 | 341.33 | -0.09% |

Rapidoid

| Metric | Normal | Aggressive | Change |
|---|---|---|---|
| Average requests/second | 834,581 | 811,091 | -2.90% |
| Standard deviation | 12,414 | 11,117 | |

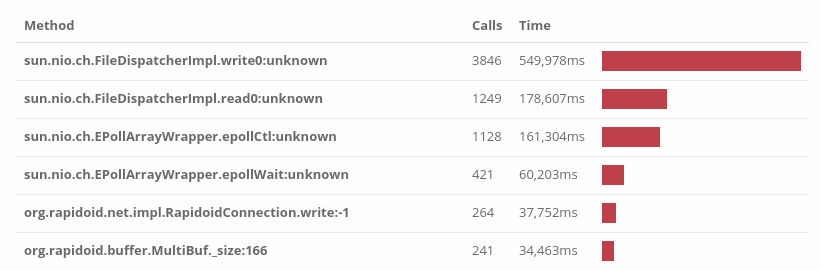

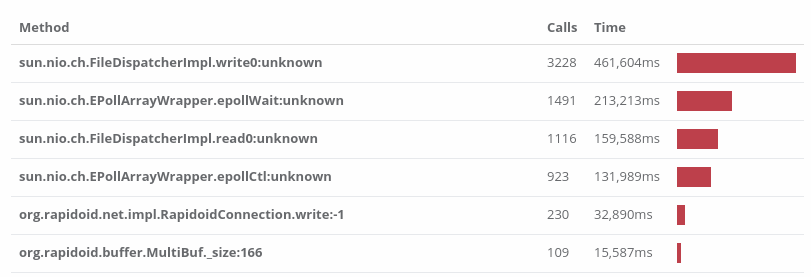

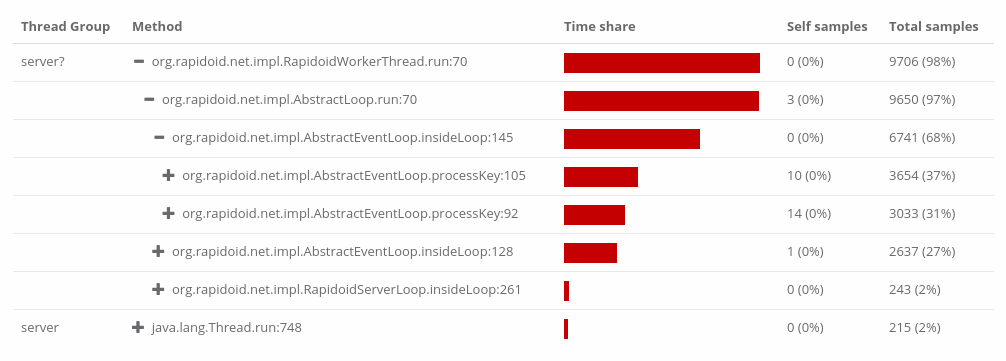

The Opsian hotspot views for the normal and aggressive runs show that both have very similar hot paths through the codebase.

What was the impact?

From the SpecJVM2008 results, there’s virtually no difference in the composite score (-0.09%), though individual benchmarks vary slightly, from -2.24% (compiler) to +2.50% (scimark.large).

However, -XX:+AggressiveOpts appears to cause a slight (2.9%) decrease in Rapidoid throughput. This seems unlikely to be related to autoboxing: a preliminary pass over the hot methods in the Opsian tree view found no places where autoboxing would take place. That probably also rules out some strange interaction between the autobox cache and other autoboxing-related optimisations (like EliminateAutoBox and DoEscapeAnalysis).
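For readers wondering what such a pass looks for, here is a hypothetical example of autoboxing that is easy to miss: counting status codes in a Map<Integer, Integer>. Every merge call boxes its key and value via Integer.valueOf. Because values like 200 or 404 lie above 127, a larger AutoBoxCacheMax is exactly the kind of setting that could change how many of those boxes get allocated. This illustrates the pattern only; it is not code from Rapidoid.

```java
import java.util.HashMap;
import java.util.Map;

public class HiddenAutoboxing {

    // Counts how often each status code occurs. No Integer appears in the loop
    // body's source, yet merge() boxes `status` and `1` via Integer.valueOf, and
    // Integer::sum's int result is boxed again before it is stored.
    static Map<Integer, Integer> countStatuses(int[] statuses) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int status : statuses) {
            counts.merge(status, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        int[] statuses = {200, 200, 404, 200, 500};
        Map<Integer, Integer> counts = countStatuses(statuses);
        System.out.println("200 -> " + counts.get(200)); // 200 -> 3
    }
}
```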

As each test run lasted five minutes, it also seems unlikely that reducing the biased locking startup delay would have a material impact: the 3.5 second difference covers only about 1% of each run. Again, though, there may be negative interactions with other optimisations that aren’t immediately obvious.

Takeaway: Check your flags

The results are an important reminder to performance-test the flags you use. AggressiveOpts provides no benefit in SpecJVM2008 and actually leads to a slight decline in performance in Rapidoid’s JSON test. These flags are often set without any performance comparison being done. This isn’t just poor practice: it can slow your application down.

Not only that, but as the JVM evolves its defaults may change in beneficial ways, for example switching the biased locking startup delay to 0 in Java 10. By retaining legacy flags, you make it less likely that you will get the benefits of newer, faster features in later JVM releases.
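As a starting point for that check, this sketch lists the -XX options a JVM was actually launched with, using RuntimeMXBean.getInputArguments(); the xxFlags filter is our own hypothetical helper. Each flag it prints is a candidate for a with-and-without comparison like the benchmarks above.

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

public class ListJvmFlags {

    // Hypothetical helper: keeps only the HotSpot -XX: options from a list of JVM arguments.
    static List<String> xxFlags(List<String> jvmArguments) {
        return jvmArguments.stream()
                .filter(arg -> arg.startsWith("-XX:"))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Arguments passed to the JVM itself (not to main), e.g. -Xmx4g or -XX:+AggressiveOpts.
        List<String> jvmArguments = ManagementFactory.getRuntimeMXBean().getInputArguments();
        List<String> flags = xxFlags(jvmArguments);
        if (flags.isEmpty()) {
            System.out.println("No -XX flags found in the JVM input arguments.");
        } else {
            for (String flag : flags) {
                System.out.println("Benchmark with and without: " + flag);
            }
        }
    }
}
```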

Sadiq Jaffer & Richard Warburton